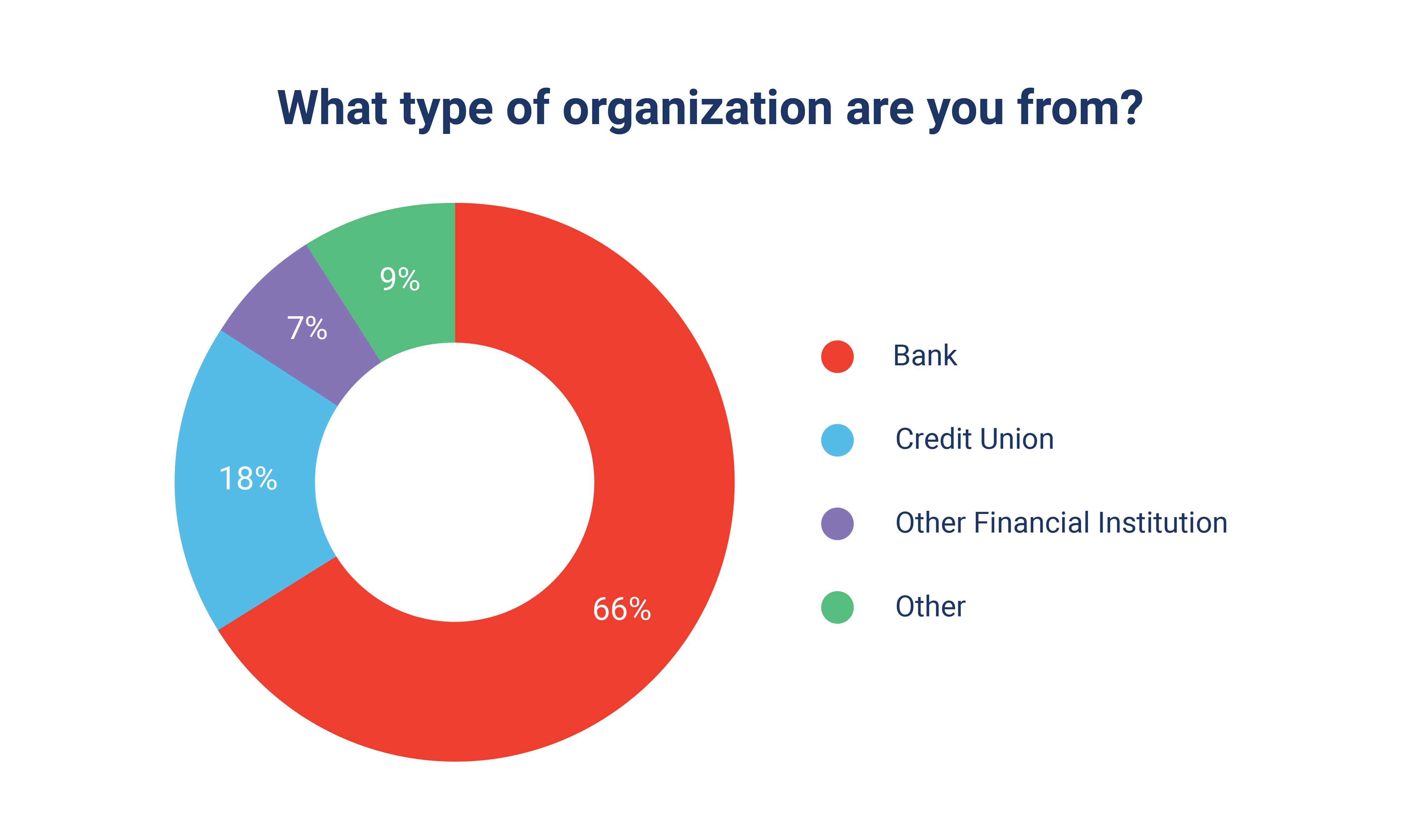

We recently hosted a webinar on "How to Write an AI Policy" for information security professionals, most of them from financial institutions. More than 200 attendees answered at least one of the poll questions during the webinar. Their answers show how financial institutions are implementing policies and training on AI in the workplace.

Our webinar audience primarily consisted of professionals from financial institutions, with the majority representing banks (66%), followed by credit unions (18%). A smaller percentage came from other types of financial organizations (7%) and non-financial sectors (9%).

AI Policy Adoption

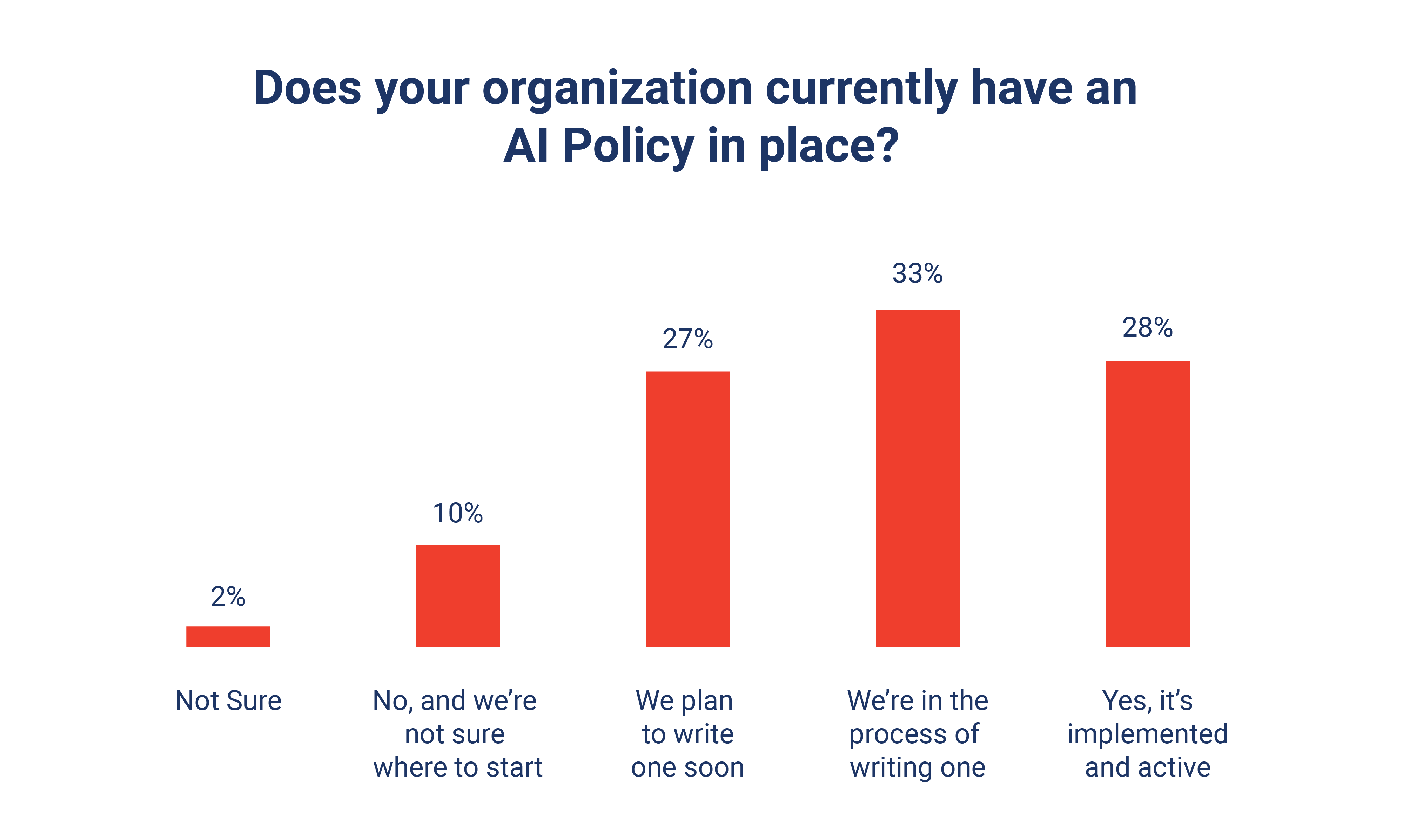

When we asked attendees whether their organization currently has an AI policy in place, fewer than one-third said they have one. Another 60% are in the process of writing a policy or plan to have one in place soon.

During this poll, one participant commented that they "don't need one." Your organization may have decided not to allow AI in the workplace, but your employees are likely using it anyway. Some employees bring in their own AI tools without express permission from management, a practice often called "Shadow AI." Others may be using AI without knowing it, because software vendors such as Microsoft and Google are building AI features into their products whether or not customers ask for them. Years ago, it was said that "Software is eating the world." Now it could be said that AI is eating the software world.

So, even if your organization does not want to allow AI, we recommend creating an AI policy and addressing AI in your acceptable use policy. This applies even if (and sometimes especially if) your stance is AI-avoidant.

If you fall into the category of "We're in the process of writing a policy," be sure to check out our blog post on the important things to consider when writing an AI policy.

How AI is Currently Being Used

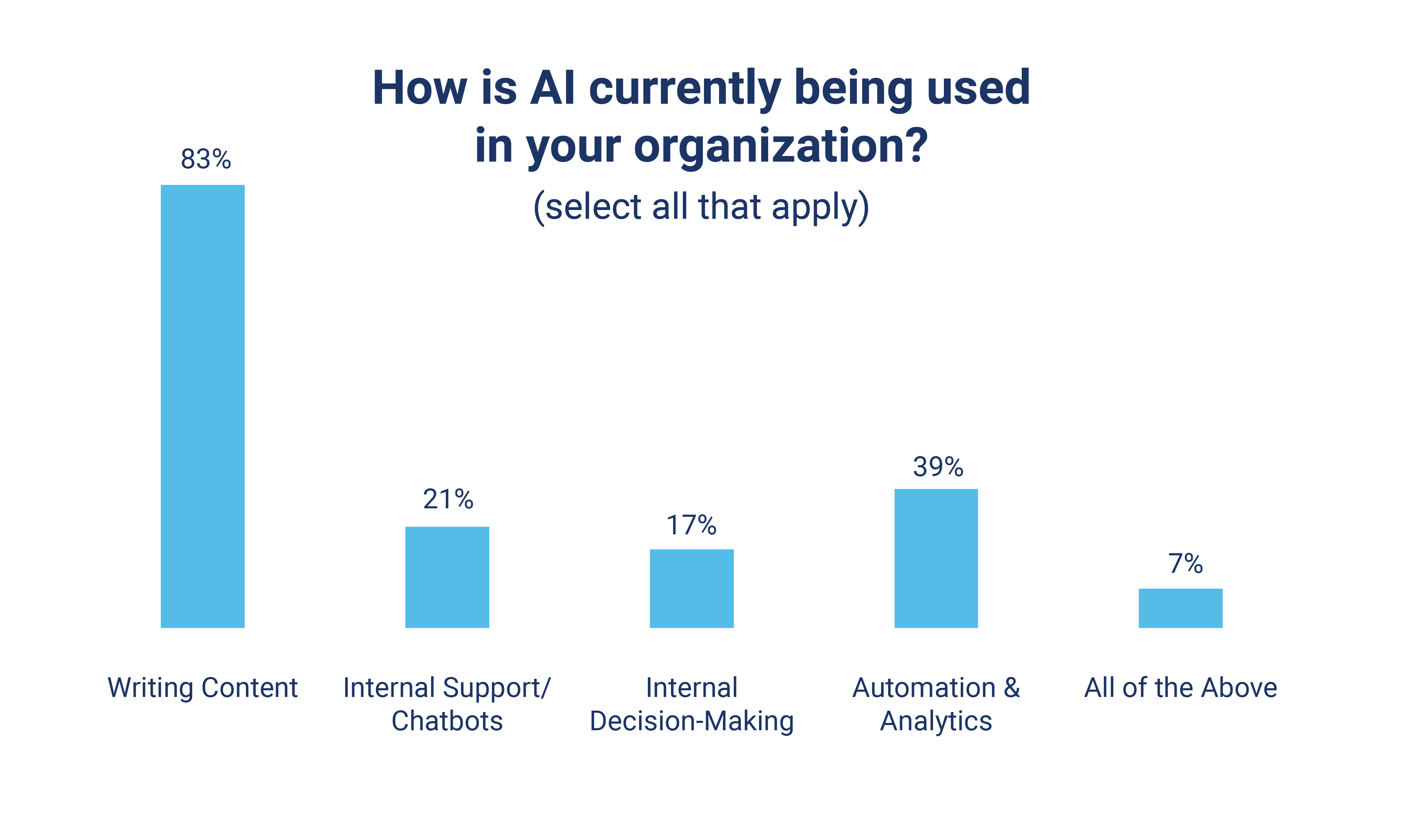

We also asked how AI is currently being used in attendees' organizations. The most frequently selected response was writing and content creation (79%), well ahead of other uses such as automation, chatbots, internal decision-making, and analytics.

These results suggest that AI use in financial institutions remains largely experimental and operational rather than strategic or mission-critical.

AI Oversight

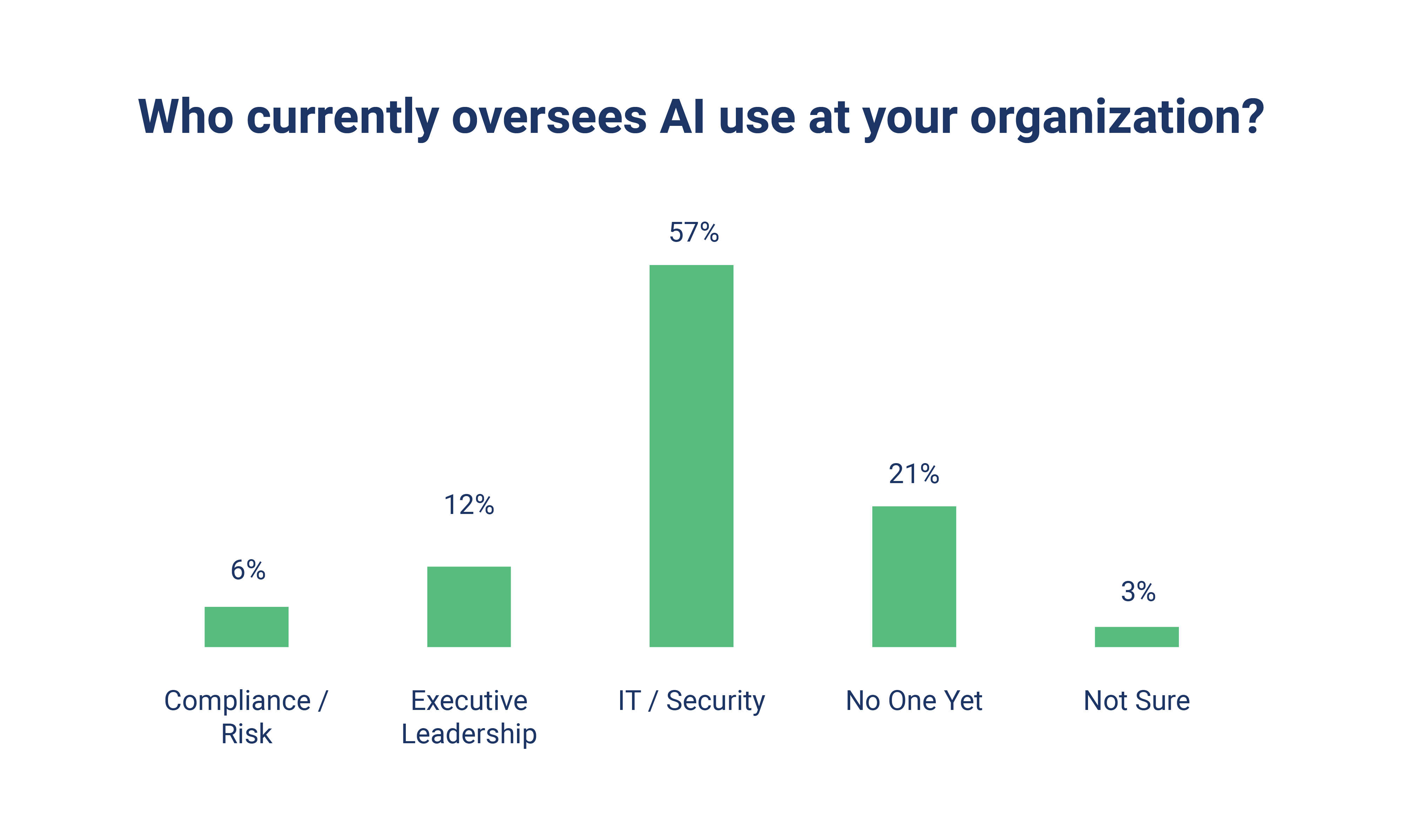

When asked, "Who currently oversees AI use at your organization?" 57% of respondents said their IT or security department oversees AI use. Executive leadership and compliance/risk teams were far less likely to be involved.

Twenty-one percent of attendees said no one is overseeing AI at their institution yet. As AI tools become more embedded in day-to-day operations, they bring not only security risks but also legal, ethical, and technical risks that someone needs to manage.

A good starting point is assigning AI oversight to a trusted role, such as your Information Security Officer (ISO) or Network Administrator. If resources allow, involve others from compliance, IT, or risk management. The goal is clear accountability and ownership.

Including AI in Your Acceptable Use Policy

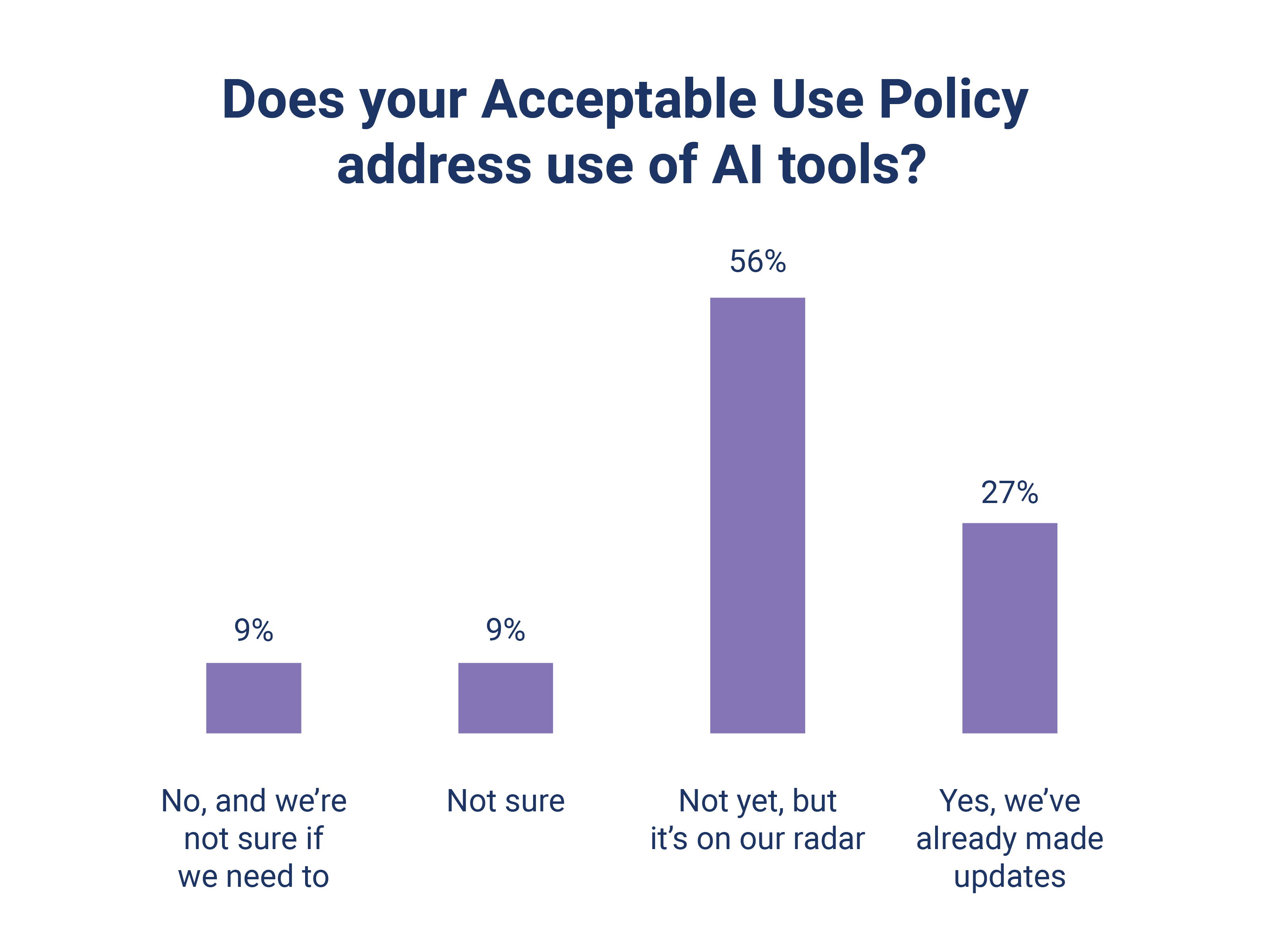

Another key area we explored was whether acceptable use policies (AUPs) have been updated to address AI.

While 31% of attendees reported that their AUPs now explicitly cover AI tools, 63% said they have not yet made updates. Because employees are adopting AI tools so quickly, organizations need to clearly define what is and isn't acceptable, and they need to communicate those boundaries just as clearly.

Updating your AUP is a step an institution can take right away to reduce AI-related risk.

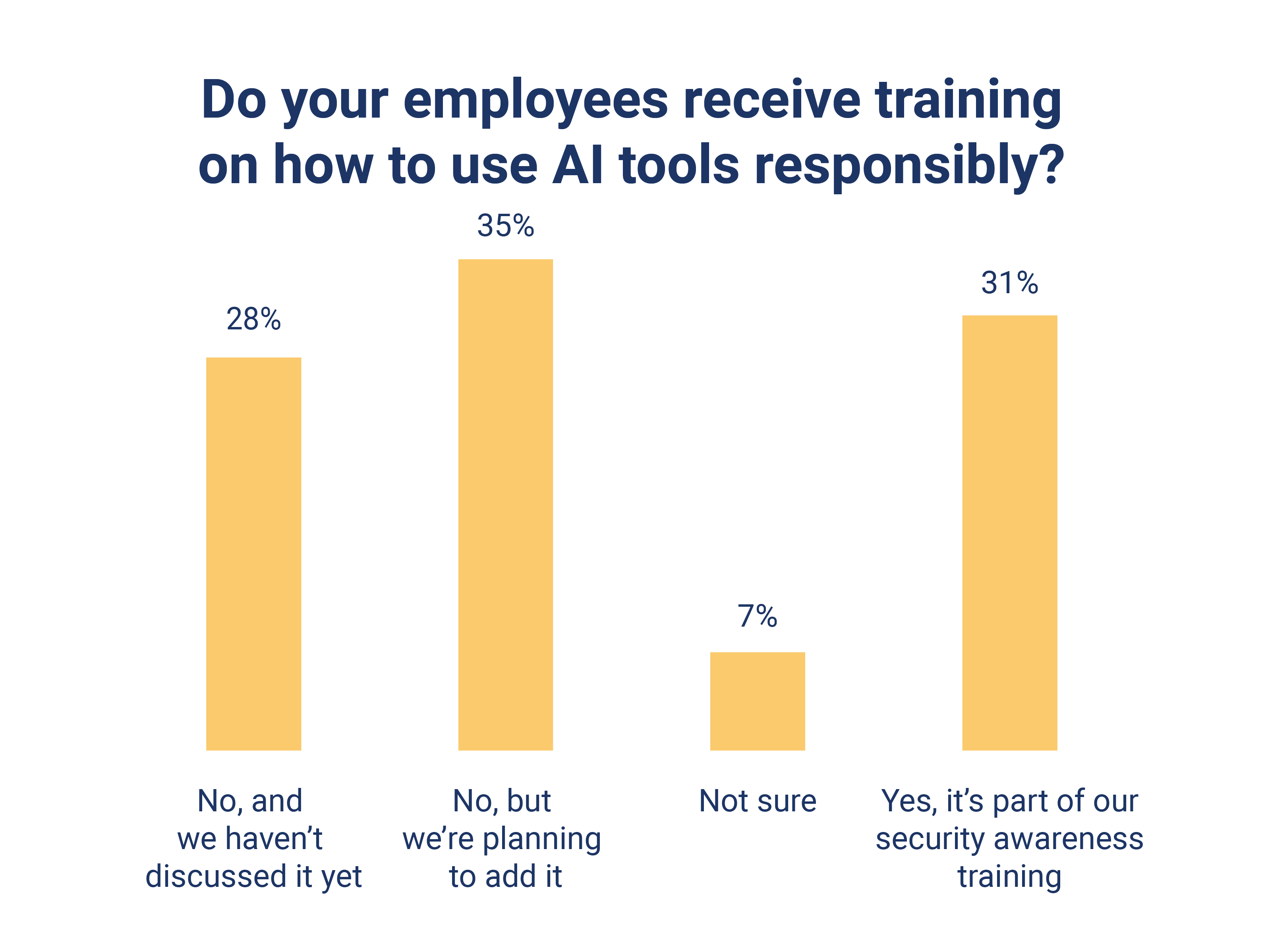

Training on AI

We asked whether employees receive training on how to use AI tools responsibly, and 31% said they already include AI usage in their security awareness training. While 62% have not yet started training employees on responsible AI use, 35% said they plan to begin training soon.

AI training is a good opportunity to reduce misuse, protect sensitive data, and support compliance with internal policies and external regulations. Even a brief module added to an existing awareness training program can make a meaningful difference.

Next Steps for Financial Institutions

Overall, the financial industry is headed in the right direction. AI use is already widespread, especially in operational areas like content creation, and many organizations are beginning to write policies and plan training. For information security professionals, this is a great opportunity to shape how AI is used responsibly and securely within their institutions.

If you are looking for a place to start with your AI policy, check out Tandem Policies. Tandem Policies provides a pre-written template for outlining AI guidelines and conducting security awareness training. The AI policy is one of many policies offered and can help jump-start your organization's information security program.