Deepfakes are quickly becoming one of the most dangerous tools in modern social engineering. With accessible AI tools, anyone can easily generate realistic voices, videos, and images that imitate real people, and attackers are using them to manipulate organizations.

How can we be prepared for attacks that look and sound this real? In this article, we'll explore how to train employees to recognize deepfakes quickly, verify unexpected requests, and report suspicious activity before it leads to serious damage.

What is a Deepfake?

This video was created using AI.

Just like the deepfake said, a "deepfake" is an audio, video, or image file made with AI to sound and/or look like a real person. Bad actors can use deepfakes to impersonate clients, colleagues, or even loved ones to gain your trust or convince you to take action.

How Deepfakes are Used

Deepfakes are often created to influence decisions, gain access, or steal information by imitating someone you trust. Here are some common ways deepfakes are being used:

- Executive Fraud: An employee receives a message or call that appears to come from the CEO urgently requesting a wire transfer or sensitive information. Because the voice and mannerisms seem genuine, it can feel difficult to question the request.

- Customer Service Scam: A caller sounds just like a real customer and urgently requests a password reset or funds transfer. The realistic tone and urgency can pressure employees to bypass normal verification steps.

- Synthetic Identity Fraud: A fraudster posing as a customer uses AI-generated photos or voice samples to pass verification and open fake accounts or credit lines. The identity appears real, but the fraudster disappears after maxing out the credit.

- Hiring Process Deception: During a remote interview, an applicant uses an AI-generated video to appear real under a false identity. Attackers use this tactic to secure employment, access systems, or gather insider information.

These scams are becoming increasingly common as AI tools advance. And it's not just happening at work: deepfakes are showing up in our personal lives, too, targeting friends and family.

Why We Believe Deepfakes

It's easy to believe that we'd never fall for a fake video, image, or phone call. But as social engineering tactics become more sophisticated, it's getting harder to spot a bad actor.

More specifically, when deepfakes enter the conversation, these tactics target how the human brain processes trust, emotion, and familiarity. Even the most security-aware employee can be fooled under the right conditions.

Here is a look at some common psychological triggers and how deepfakes take advantage of our natural tendencies.

| Psychological Trigger | Deepfake Impact |
| --- | --- |
| Truth Bias | Humans are wired to trust what feels familiar and confident. When we recognize a face, hear a known voice, or receive a message from someone in authority, our brain automatically flags it as "safe." Deepfakes exploit that reflex. |
| The Familiarity Effect | The more often we see or hear something, the more we tend to like and trust it. Psychologists call this the "mere exposure effect." In practice, it means that if a deepfake video looks mostly right, our minds fill in the gaps and label it as authentic. We want to believe in the familiar, even if it's slightly off. |
| Emotional Manipulation | Strong emotion shuts down rational thinking. Attackers know that urgency, fear, or even praise can override our normal verification habits. A message that says "This is urgent, I need help now" doesn't just sound stressful; it triggers a physiological response. Your heart rate increases, your focus narrows, and your instinct becomes to act fast. Deepfakes amplify that manipulation by displaying emotion (through facial expressions, tone, or visible stress) that makes the situation feel real. |
| Cognitive Overload | In today's workplace, most people are multitasking and juggling meetings, emails, and instant messages. When our brains are overloaded, we rely on shortcuts to make quick judgements, a process called heuristic thinking. Deepfakes exploit that fatigue. When you're under pressure, your brain scans for context clues (like a familiar face or voice) instead of doing deeper analysis. That's when red flags slip by unnoticed. |

Awareness isn't just about spotting deepfakes; it's about understanding how they manipulate us. By recognizing our own psychological triggers (trust, familiarity, emotion, and overload), we can give ourselves a moment to pause before reacting.

How to Spot a Deepfake

Just like other forms of social engineering, deepfakes are designed to feel real. They don't succeed because they're perfect; they succeed because they're just convincing enough. Spotting a deepfake often comes down to noticing the small inconsistencies that don't quite match what we expect. Here are some key areas where those red flags tend to show up.

Visual Cues

AI-generated videos can look impressively realistic but often struggle with the finer details of human movement and environment. When you slow down and look closely, you may spot clues that something's not right, like:

- Unnatural movements: Robotic blinking or awkward, inconsistent gestures

- Mismatched lighting or shadows: The face is lit differently from its surroundings

- Facial distortions: Warping or flickering when the person moves their head

- Overly smooth skin or hair: A plastic-like texture without pores, hair strands, or imperfections

- Changing background: Objects that warp or flicker when they shouldn't

If something feels too polished or slightly "off," don't ignore that instinct. Visual imperfections are often the first signs of a synthetic image or video.

Audio and Speech Cues

Even when a video looks good, the voice might tell a different story. Audio deepfakes mimic tone and phrasing, but they often struggle to fully replicate natural human speech patterns.

Listen for clues like:

- Mismatched lip-syncing: Mouth movements that don't align with the words

- Mispronunciations or odd phrasing: Words that sound unnatural or out of rhythm

- Repetitive cadence: A flat voice with the same tone, and no natural breathing or emotion

Real voices contain flaws: pauses, breaths, emotion, and small stumbles. Deepfakes often remove those imperfections, which ironically makes them easier to spot.

Behavioral Cues

Deepfakes don't just mimic a person's face or voice; they mimic their authority. That's why behavioral cues are just as important as what you see and hear. Attackers rely on emotional pressure, context, and urgency to push people into reacting quickly.

Look for behaviors like:

- Unusual urgency: Pressure to complete a task or share information quickly

- Out-of-band request: Messages from unfamiliar numbers or calls outside approved channels

- Emotional manipulation: Excessive stress, flattery, or intimidation to influence your response

- Deflecting: The person avoids certain topics, cannot answer questions, or provides inconsistent details

Behavioral red flags matter most because deepfakes often target our emotions first. Urgency and fear override critical thinking, making us more likely to comply without verifying.

Responding to Deepfakes

Spotting a deepfake is only half the battle; knowing what to do next is just as important. If something feels unusual, the worst thing you can do is shrug it off. Deepfakes are intentionally designed to sound and look real, so if you spot a red flag, trust your instincts.

When in doubt, you don't need special tools or technical skills. You just need a simple, repeatable response.

- Pause. Deepfakes thrive on urgency. The attacker wants you to feel like you have to act right now: approve the transfer, reset the password, share the document, or jump on the call. The most powerful thing you can do is slow down. If a request feels unexpected, high-pressure, or out of character, don't be afraid to press pause.

- Verify. Follow your organization's trusted plans, like your callback procedures or Identity Theft Prevention Program. The goal is to verify requests through a trusted channel. This might look like hanging up and calling the person directly using a known number, communicating through another trusted out-of-band channel, or checking with your manager before moving forward. A 30-second verification can stop a problem that would take weeks to unwind.

- Report. If something seems suspicious, even if you're not completely sure, report it to your supervisor or to your IT and security team. Provide screenshots, emails, or call details so they can investigate. Once reported, follow your organization's established communication and incident response procedures. Avoid forwarding suspicious content to coworkers, posting about it, or trying to handle it alone. Your organization's policies are designed to contain incidents, protect sensitive information, and preserve evidence if a deeper investigation is needed.
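For teams that want to build these steps into a ticketing or help desk workflow, the pause, verify, and report sequence can be sketched as a small decision function. This is an illustrative sketch only, not part of any real product: `TRUSTED_DIRECTORY`, `Request`, and `respond` are hypothetical names, and the contact details are made up. The key idea is that the callback number comes from a directory your organization maintains, never from the incoming message or call itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical directory of known-good callback numbers kept by the
# organization. The entries here are invented for illustration.
TRUSTED_DIRECTORY: Dict[str, str] = {"ceo@example.com": "+1-555-0100"}


@dataclass
class Request:
    claimed_sender: str   # who the caller or message claims to be
    inbound_contact: str  # number or address the request arrived from
    action: str           # e.g. "wire transfer" or "password reset"
    red_flags: List[str] = field(default_factory=list)  # cues you noticed


def respond(request: Request, confirmed_by_callback: bool) -> List[str]:
    """Return the steps to take, in order: pause, verify, then proceed or report."""
    steps = ["pause: do not act on the request yet"]

    # Look up the callback number in the trusted directory.
    # The inbound contact is deliberately ignored for verification.
    number = TRUSTED_DIRECTORY.get(request.claimed_sender)
    if number is None:
        steps.append("verify: no trusted number on file; check with your manager")
    else:
        steps.append(f"verify: hang up and call back on {number}")

    # Proceed only when a trusted number exists and the callback confirmed it.
    if number is not None and confirmed_by_callback:
        steps.append(f"proceed: {request.action} confirmed through a trusted channel")
    else:
        flags = ", ".join(request.red_flags) or "none noted"
        steps.append(f"report: notify your supervisor or IT/security (red flags: {flags})")
    return steps


if __name__ == "__main__":
    suspicious = Request(
        claimed_sender="ceo@example.com",
        inbound_contact="+1-555-9999",
        action="wire transfer",
        red_flags=["unusual urgency", "unfamiliar number"],
    )
    for step in respond(suspicious, confirmed_by_callback=False):
        print(step)
```

In this sketch, `confirmed_by_callback` stands in for the outcome of the human verification step. A real workflow would follow your organization's own callback procedures and incident response plan rather than a fixed rule like this one.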

You don't have to be perfect, and you don't have to be an expert in AI. You just have to be willing to pause, verify, and speak up when something doesn't feel right.

Staying Secure in a Deepfake World

Deepfakes are no longer just internet gimmicks; they're quickly becoming one of the most sophisticated tools used in fraud, impersonation, and social engineering. As AI advances, the line between what's real and what's fake will continue to blur. But even as the technology becomes more convincing, our best defense doesn't change.

It starts with awareness, careful thinking, and the willingness to verify before we react.

Throughout this guide, you've learned:

- What deepfakes are and the many forms they take.

- How attackers use them to create pressure, evoke trust, or exploit emotion.

- Why we fall for them, and the psychological triggers that make fake seem real.

- How to spot, respond to, and report deepfakes using simple, reliable steps.

Technology will always evolve, but so can we. When we slow down, think critically, and question what doesn't feel right, we protect not only ourselves, but the entire organization.

Stay curious. Stay cautious. Stay aware.

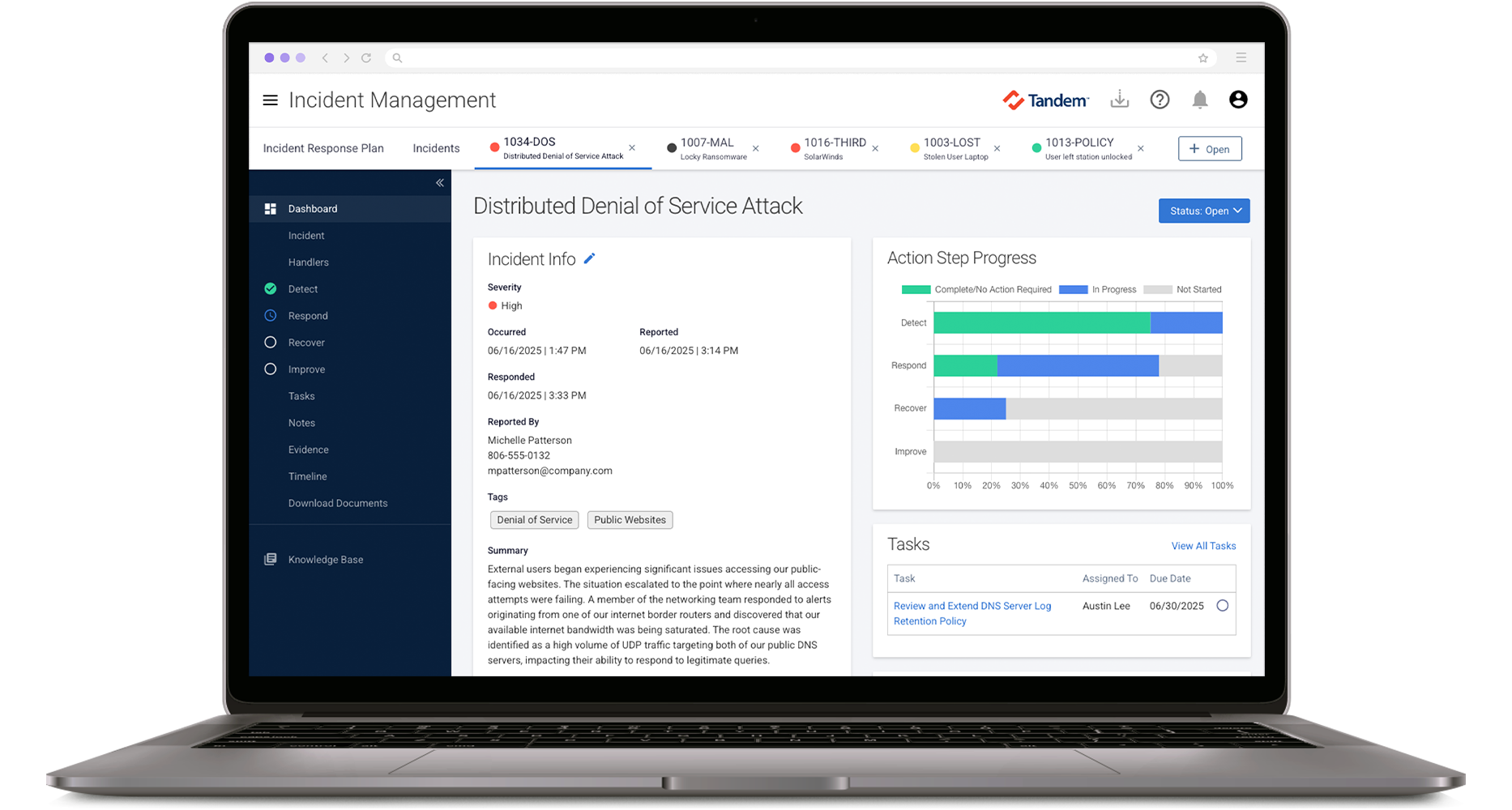

Take the Next Step with Tandem

Want to take the next step? Tandem Incident Management features a dedicated Deepfake Awareness Training course designed to help employees recognize AI-generated impersonation before it leads to fraud.

Pair it with our Deepfake Tabletop Scenarios, and your team can practice detecting, responding to, and recovering from deepfakes when they show up in real-world situations.

See how Tandem can help you at Tandem.App.